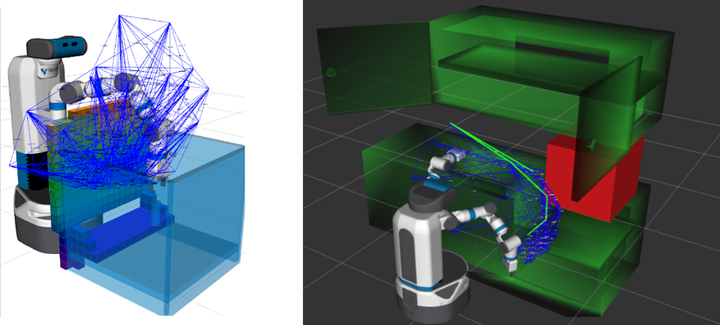

(Left) The Fetch robot planning a motion to pick up an object inside the box where only part of the box (colored voxels) is visible. (Right) Fetch robot planning its motion to move the hand from the oven to the counter top while avoiding the (not observable) heat coming from the stove.

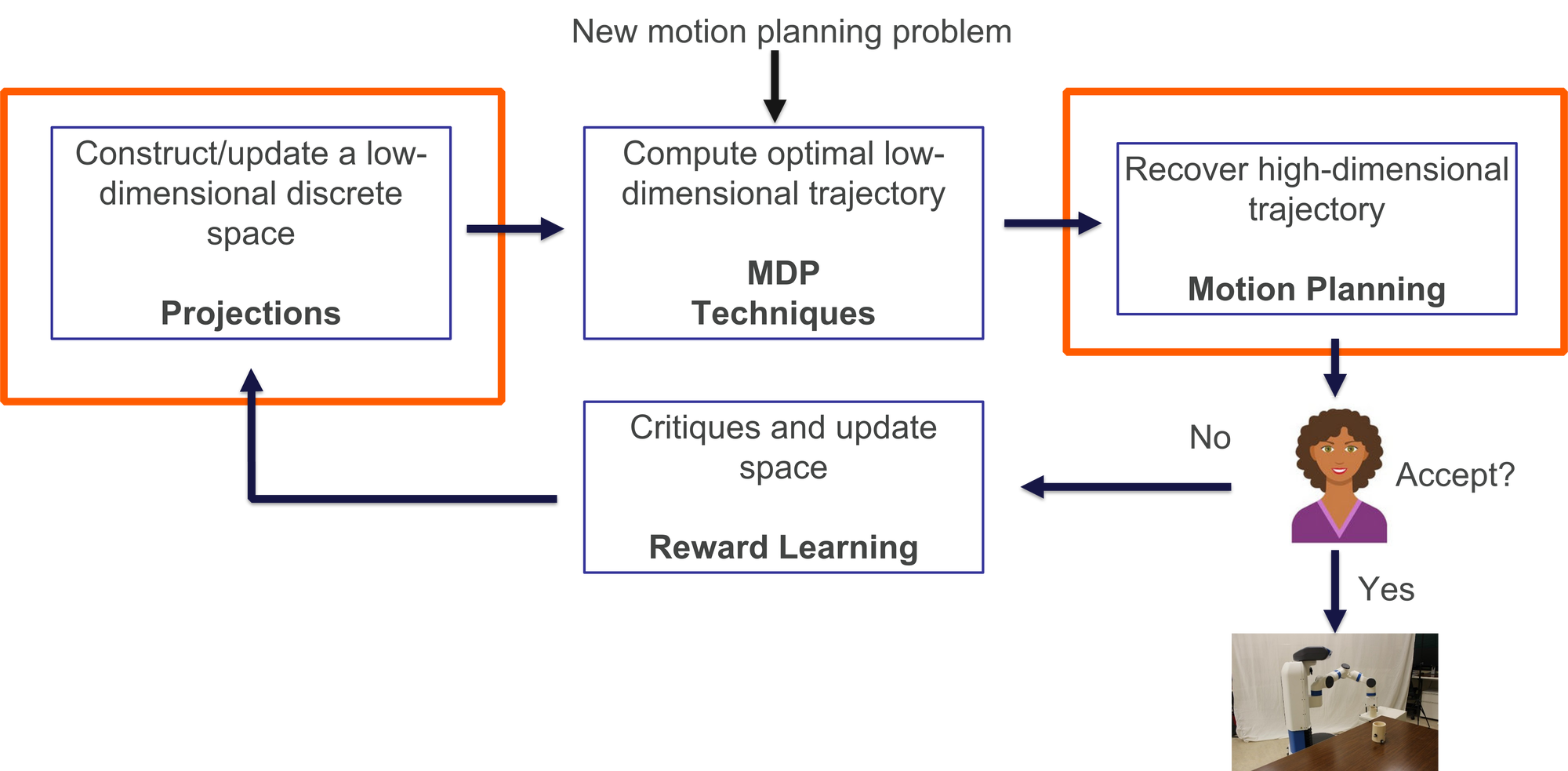

This project addresses motion planning for high-DOF robots under partial observability, using guidance from humans. We propose Bayesian Learning in the Dark (BLIND), an algorithm that leverages human senses to compute high-DOF robot trajectories that remain safe despite partial observability of the environment. The main components that make up BLIND are shown next.

The construction of a low-dimensional discrete state space and the guided motion planner are the two main novelties within BLIND.

The former leverages projections and sampling-based motion planners to create a space where reward learning can be performed. The latter uses optimization-based motion planning to incorporate the knowledge learned from the human as motion constraints.
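To make the idea of a low-dimensional discrete state space concrete, here is a minimal sketch. It is not BLIND's actual construction: the projection (end-effector position of a toy planar arm), the grid cell size, and every function name are assumptions for illustration, and uniform random sampling stands in for a sampling-based motion planner.

```python
import math
import random


def project(q, link_length=0.3):
    """Toy projection (assumed): end-effector position of a planar arm."""
    x = y = angle = 0.0
    for joint in q:
        angle += joint
        x += link_length * math.cos(angle)
        y += link_length * math.sin(angle)
    return x, y


def to_cell(point, cell_size=0.1):
    """Map a continuous low-dimensional point to a discrete grid cell."""
    return tuple(math.floor(c / cell_size) for c in point)


def build_discrete_states(samples, cell_size=0.1):
    """Group sampled configurations by the cell their projection lands in."""
    states = {}
    for q in samples:
        states.setdefault(to_cell(project(q), cell_size), []).append(q)
    return states


# Hypothetical usage: 500 random 7-DOF configurations binned into 2-D cells.
rng = random.Random(0)
samples = [[rng.uniform(-math.pi, math.pi) for _ in range(7)] for _ in range(500)]
states = build_discrete_states(samples)
```

Each key of `states` is a discrete state, and each value lists the high-dimensional configurations that map to it, so anything learned per cell can be traced back to joint space.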

Together, these two components allow critique-based reward learning to be used to learn and compute safe high-dimensional trajectories despite incomplete environmental information.
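One simple way to picture learning from critiques over discrete states is a Beta-Bernoulli belief per cell, sketched below. This is a stand-in model chosen for illustration, not the reward learning method BLIND uses; the class name, the priors, and the threshold are all hypothetical.

```python
from collections import defaultdict


class CellBelief:
    """Per-cell Beta belief that a discrete state is unsafe (assumed model)."""

    def __init__(self, prior_unsafe=1.0, prior_safe=1.0):
        # counts[cell] = [pseudo-count unsafe, pseudo-count safe]
        self.counts = defaultdict(lambda: [prior_unsafe, prior_safe])

    def update(self, visited_cells, critiqued_cells):
        """Critiqued cells count as unsafe evidence, other visited cells as safe."""
        critiqued = set(critiqued_cells)
        for cell in set(visited_cells):
            if cell in critiqued:
                self.counts[cell][0] += 1.0
            else:
                self.counts[cell][1] += 1.0

    def p_unsafe(self, cell):
        """Posterior mean probability that the cell is unsafe."""
        unsafe, safe = self.counts[cell]
        return unsafe / (unsafe + safe)

    def cells_to_avoid(self, threshold=0.6):
        """Cells a downstream planner could treat as constraints."""
        return {c for c in list(self.counts) if self.p_unsafe(c) > threshold}


# Hypothetical usage: the human critiques cell (1, 0) on two shown trajectories.
belief = CellBelief()
path = [(0, 0), (1, 0), (2, 0)]
belief.update(path, critiqued_cells=[(1, 0)])
belief.update(path, critiqued_cells=[(1, 0)])
avoid = belief.cells_to_avoid()
```

In this sketch, the set returned by `cells_to_avoid` plays the role of the knowledge that a guided planner would receive as motion constraints.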

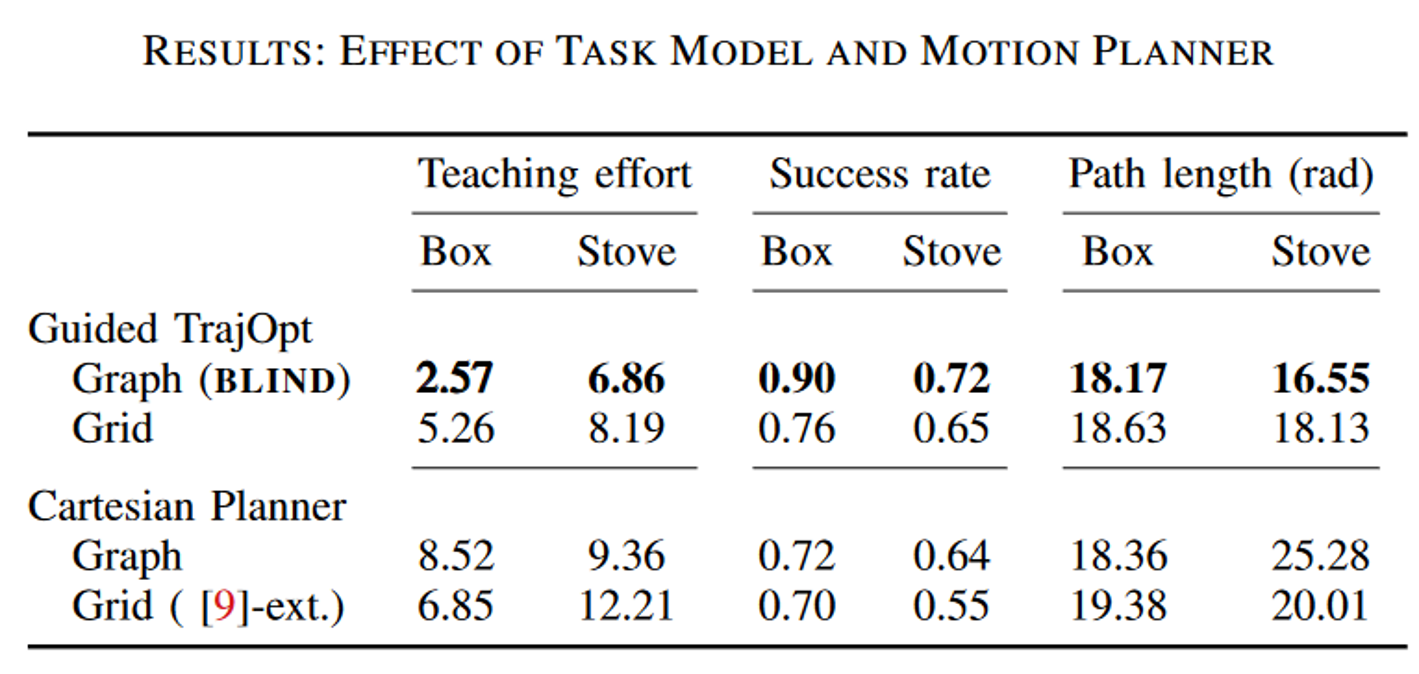

The results show that BLIND outperforms state-of-the-art methods in teaching effort, success rate and path length.

Check out our video of a robot implementation of BLIND, where a human user is asked to critique potentially unsafe trajectories. BLIND runs in real time and converges to a safe solution.